In American Prometheus: The Triumph and Tragedy of J. Robert Oppenheimer, Kai Bird and Martin J. Sherwin’s Pulitzer Prize-winning biography and basis for Christopher Nolan’s OPPENHEIMER, one word comes up time and time again: naïve. Indeed, in 1953, while having lunch with the Director of the Marshall Plan, the “father of the atomic bomb” admitted he “didn’t understand to this day why Nagasaki was necessary…”

It is, perhaps, cold comfort today that the reasons for dropping a second atomic bomb are clear enough to anyone who has read more than a basic U.S. History textbook. Ignoring Stalin’s promise at Yalta and his cryptic equanimity five months later at Potsdam; disregarding mokusatsu, foreknowledge of Soviet invasion dates, and Japan putting out feelers for conditional surrender with Russia; even looking past showing the Soviets—in no uncertain terms—that we had gotten the bomb first, an explanation for why Nagasaki was “necessary” is as simple as it is sad: The Manhattan Project created two types of bomb, but only tested one; the U.S. military wanted to see if both worked.

Even the great visionaries who shape the raw stuff of time and place into what can be called history can prove rather myopic. “Genius is no guarantee of wisdom,” poses Lewis Strauss, the film’s chief antagonist. “How could this man who saw so much be so blind?” Oppenheimer, touched with grave certitude by the words of Niels Bohr, had sought a means to end all war. Instead, he would see into the world war without end. The Nuclear Age had only just begun and already he found himself looking at a post-nuclear world.

A parallel can be found in one Fritz Haber.

By the late 1800s the world was facing a seemingly insurmountable problem as improvements made to agriculture during the Industrial Revolution had allowed its population to grow beyond what could be sustained by natural sources of fertilizer. Global famine threatened hundreds of millions of lives. In Germany alone, some 20 million people faced starvation.

German, Jew, patriot, in 1918 Haber won the Nobel Prize in Chemistry for his part in the Haber–Bosch process, by which atmospheric nitrogen could be captured as ammonia (NH₃) for use as fertilizer. He became known as “the man who pulled bread from the air.” In the little more than a century since, Earth’s population has exploded from what was seen then as a hard cap of 1.5 billion to an unimaginable eight. Today, half of the world’s food base is dependent on the Haber–Bosch process—and half of the nitrogen found in the average human body is of synthetic origin. Soon after its invention, however, his process would be repurposed from one that gave life to one that took it.

On August 1, 1914, one year after the BASF plant (Badische Anilin- und Sodafabrik, the world’s largest chemical producer) went online in Oppau, Germany was drawn into war. Blockaded, surrounded by the Triple Entente, and with no nitrate (a necessary component of gunpowder) resources of its own, Germany found itself in an untenable position. By the end of November and the First Battle of Ypres, Germany would suffer as many casualties as Belgian, British, and French forces combined.

Eager not just to keep Germany in the fight but to win it, Haber reasoned that Oppau’s daily output of 20 metric tons of ammonia could be used to produce not only nitric acid (a precursor to nitrate) on an industrial scale, but formidable chemical weapons as well. Ever the scientist, Haber would personally oversee the first use of chlorine gas at the Second Battle of Ypres, where favorable winds would carry a 168-ton, greenish-yellow cloud of gas over Allied lines, killing some 1,100 soldiers not in days, not in hours, but in minutes. Clara Immerwahr, who had spoken out against her husband’s “perversion of the ideals of science,” would commit suicide at a party honoring him less than two weeks later.

Predictably, though the Brits decried it as a “cynical and barbarous” “dirty weapon,” and a “cowardly form of warfare,” poison gas would soon be visited upon Haber’s countrymen. By war’s end, Allied forces would use more gas than Germany, and history’s first weapon of mass destruction would kill nearly 100,000 people and wound a million more. Ultimately, his efforts would cause The Great War to drag on for another three years, resulting in millions of additional casualties (including the partial blinding of a young artist named Adolf), and “the man who pulled bread from the air” would also come to be known as the “father of chemical warfare.”

After the war, Haber returned to his work in agriculture, and helped to create a particularly effective cyanide-based insecticide. That a bug spray would lead to the development of Zyklon B and find use in the extermination of more than a million Jews—of whom some were his own family—is an irony so cruel one must hope it could not have been foreseen.

In another such twist of fate, of the hundreds of thousands killed by the weapon developed to defeat the Nazis, more than 95 percent would be civilians. As for the question that troubled Oppenheimer so, the answer is one he could never have known: On the morning of August 9, 1945, Nagasaki was bombed because Kokura, the site of western Japan’s largest weapons factory, fell under cover of clouds.

If only there had been some kind of sign

The Fall, Pandora, Prometheus… our oldest stories warn us: “You can’t lift the stone without being ready for the snake that’s revealed.” If history seems intent on repeating itself, perhaps it is because we keep failing to learn this lesson. Every time man reaches and pulls something from the air, something else comes with it. And when the military is cutting the checks, well, every breakthrough, every promise of a better tomorrow, is also a promise that there may be no tomorrow at all.

On December 10, 1945, two months after Oppenheimer resigned as director, the world’s first general-purpose electronic digital computer, a 30-ton behemoth dubbed ENIAC (Electronic Numerical Integrator and Computer), was switched on at the University of Pennsylvania and immediately put to work on hydrogen bomb calculations for Los Alamos.

1959 saw the debut of the U.S.’ first ICBM, the Atlas D, and the first nuclear-capable submarine, as well as the integrated circuit. Costly, with no clear purpose, the microchip may not have gone anywhere had the Air Force not decided to use hundreds of thousands of them in production of the Minuteman II. GPS was developed for ICBM guidance, too, born out of the USAF doctrine of HADPB (high-altitude, daylight, precision bombing). Even the internet owes its existence in part to the Department of Defense wanting a network capable of surviving nuclear war.

On the other hand, what a film like OPPENHEIMER might be called, a blockbuster, was originally a quite-literally-named bomb used in WWII. The microwave (a byproduct of RADAR development made commercially available by Raytheon) “nukes” food. Hell, the bikini was unveiled four days after the first nuclear test at the Bikini Atoll. And just how many things are “the bomb,” anyway?

New normals. Business as usual. “The more things change, the more they stay the same.” Maybe it’s a bit of that good ol’ American Optimism, maybe it’s just naïveté, but the point is we’re pretty good at looking past the horror so long as we have something to distract ourselves with. So, while Japan got Gojira, we—speeding ever toward the brink—got Spider-Man and Iron Man.

Trinity was the most important moment in human history. But, as OPPENHEIMER so cogently stresses, we “took a wrong turn.” The tremendous energy to be found in the heart of the atom was always there, waiting. We found it. We could have made it accessible and affordable to all (even Strauss, that villain, championed peaceful uses of atomic power, believing it would one day make electricity “too cheap to meter”), but nuclear reactors were—are—seen as too costly; they would never turn a profit. Instead we mortgaged our future on coal and oil. And even as we eased into a long, decadent slide, we continued to pursue our most pathological, our most thanatotic impulses, amassing more and more powerful weapons at the expense of just about everything else. (In 1998, The Brookings Institution found that from 1940 to 1996 the U.S. spent nearly $5.5 trillion on nuclear weapons. Adjusted for inflation to 2023 dollars, that number would be roughly $10.7 trillion.)

At the height of the Cold War, more than 60,000 nuclear weapons existed. Today that number is between 12,000 and 13,000 (still more than enough to end all life several times over; not to mention the, say, theoretical weapons so vile the use of a single device could salt the very Earth) and, though a nuclear holocaust is now seen as “very unlikely,” other threats have risen in its stead. As it stands, around nine million people starve to death each year. As the effects of climate change compound exponentially, with “extreme weather events” occurring so frequently they can no longer rightly be said to be extreme, several million more face hunger with every year that passes. As we draw inexorably nearer another point of no return, we will come to see more than three billion lives—40 percent of the world’s population—become “highly vulnerable” to this change. Over a billion will be displaced as “climate refugees.” This is to say nothing of attendant conflicts.

Has anyone considered nuclear winter as a solution to global warming?

All of this is happening as we rapidly approach another singularity: AI.

Less than one month after Japan’s formal surrender on September 2, 1945, the official end of WWII, Commanding General of the Army Air Forces H. H. Arnold met with top minds from the Douglas Aircraft Company on October 1 to establish Project RAND (as in Research and Development). In 1948, RAND was incorporated as a nonprofit in order “to further and promote scientific, educational, and charitable purposes, all for the public welfare and security of the United States of America.”

In his 1950 paper “Computing Machinery and Intelligence,” Alan Turing, cracker of the Enigma code, eater of the poisoned apple, asked the question: “Can machines think?” The term “artificial intelligence” was coined six years later, in the summer of 1956, at the Dartmouth Summer Research Project on Artificial Intelligence. There Allen Newell, Cliff Shaw, and Herbert Simon presented Logic Theorist, the first computer program designed to simulate human problem-solving ability—the first artificial intelligence program. Logic Theorist was funded by the RAND Corporation.

DARPA, the R&D arm of the DOD that gave us the internet, began funding AI research in 1963.

Like nuclear power, AI is inherently, from inception, military technology. And just like nuclear power, AI will revolutionize the world—for better or worse. As with climate change, what we see of it may first seem rather innocuous; most won’t notice what’s happening to the world around them until it’s right outside their window. And, though we’ll get to ride around in driverless cars and watch AI-generated Choose Your Own Adventure-style content, one way or another, we—us second sheep—will be made to foot the bill. So while the naïfs wring their hands and clutch their pearls over the moral implications of using ChatGPT to write school papers and cover letters, the questions of what happens when half of everyone is out of a job or a video of a nuclear strike on the White House is released go unanswered. Fomenting violence is easy enough as it is; a deepfake of a political or religious leader declaring open season on an out-group presents no more of a challenge than putting an actress’ face on a porn star’s body.

Released after THE DARK KNIGHT RISES, which features Nolan’s first use of a nuke as a plot device, and sandwiched around DUNKIRK, a WWII film, are two films which deal with physics and climate change. INTERSTELLAR concerns mankind’s quest to leave a blighted Earth in the face of starvation, having to reconcile general relativity with quantum mechanics (and not “drop bombs from the stratosphere onto starving people”) in order to do so. In the more brash spectacle of TENET there is (along with a conversation about The Manhattan Project and Oppenheimer) a practically whispered line which reveals why people from the future would want to destroy our present: “Because their oceans rose and their rivers ran dry. Don’t you see?… We’re responsible.”

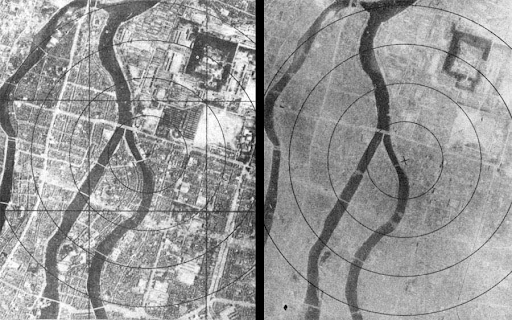

Hiroshima: Before and After

Nuclear and climate anxiety pervade Nolan’s filmography, as does a preoccupation with technology, genius, and burden. And though a certain ambivalence is clear, so is a prevailing faith—not necessarily in technology or in the people behind it, but in those who must do the hard thing, the right thing. People like Leo Szilard and David Hill. Heroes, in a word.

In this respect, OPPENHEIMER is Nolan’s Trinity. But like that most profound crucible, it is as naïve to think that a three-hour-long biopic about one of history’s most polarizing figures, even as part of a broader pop culture phenomenon like “Barbenheimer,” can “save cinema” as it is to think—hope—an atom bomb or any other piece of hardware with the potential to save the world actually will. We simply can’t count on the people with their fingers on the buttons to make the right call (which, ironically—sadly—is part of the reason AI was developed in the first place). Just look at “Barbenheimer”: Hollywood was scrambling for its next cash cow even before the superhero teat began to sour. Now that BARBIE has gone on to become not only the top-grossing film by a female director but Warner Bros.’ top-grossing film, Hollywood will take away from this moment what it always has. Already Mattel has announced plans to launch its own “Mattel Cinematic Universe” with 14 more movies based on toys. So instead of more sobering reflections on triumph and tragedy or the elevation of more female voices we can expect to see Polly Pocket and… UNO starring Lil Yachty.

In a recent interview, Nolan spoke of Robert Oppenheimer’s enduring relevance as well as the dangers of AI: “When I talk to the leading researchers in the field of AI right now, for example, they literally refer to this right now as their Oppenheimer moment. They’re looking to his story to say ‘Okay, what are the responsibilities for scientists developing new technologies that may have unintended consequences?’”

The interview concludes with Nolan saying that AI presents “a terrifying possibility. Not least because, as AI systems go into the defense infrastructure, ultimately they’ll be in charge of nuclear weapons. And if we allow people to say that that’s a separate entity… then we’re doomed. It has to be about accountability. We have to hold people accountable for what they do with the tools that they have.”
